A couple of weeks ago (February 6th, 2021), I attended Virtual Tech Meetup Event 6, hosted by @Sherif. It included a talk by Hesham Ismail (Engineering Manager at Facebook) about the Importance of Measurement, in which he covered A/B testing, a concept that was completely new to me.

That talk, together with the idea of A/B testing in code and the use of Feature Toggles (aka Feature Flags), which Martin Fowler discusses in his book "Refactoring: Improving the Design of Existing Code", triggered me to start investigating this topic.

I started reading articles and watching videos that discuss A/B testing in depth: why we may need it, the flow of running an A/B experiment, what Feature Flags are and how to use them, and the tools we need to achieve our goal.

Then I decided it would be better to write summaries, or personal notes so to speak, based on the articles I have read and the videos I have watched.

In this part, I will discuss A/B testing as a concept, how it works, how to compare the results to reach a final decision, and a use case for A/B testing. So without further ado, let's get it started!

What is A/B Testing

In the online world, the number of visitors to your website equals the number of opportunities you have to expand your business by acquiring new customers. Any update to your website may therefore affect the number of visitors and their experience using it.

So collecting data about the effect of your changes on users will help you make more data-informed decisions. By measuring the impact your changes have on specified metrics, you shift business conversations from "we think" to "we know".

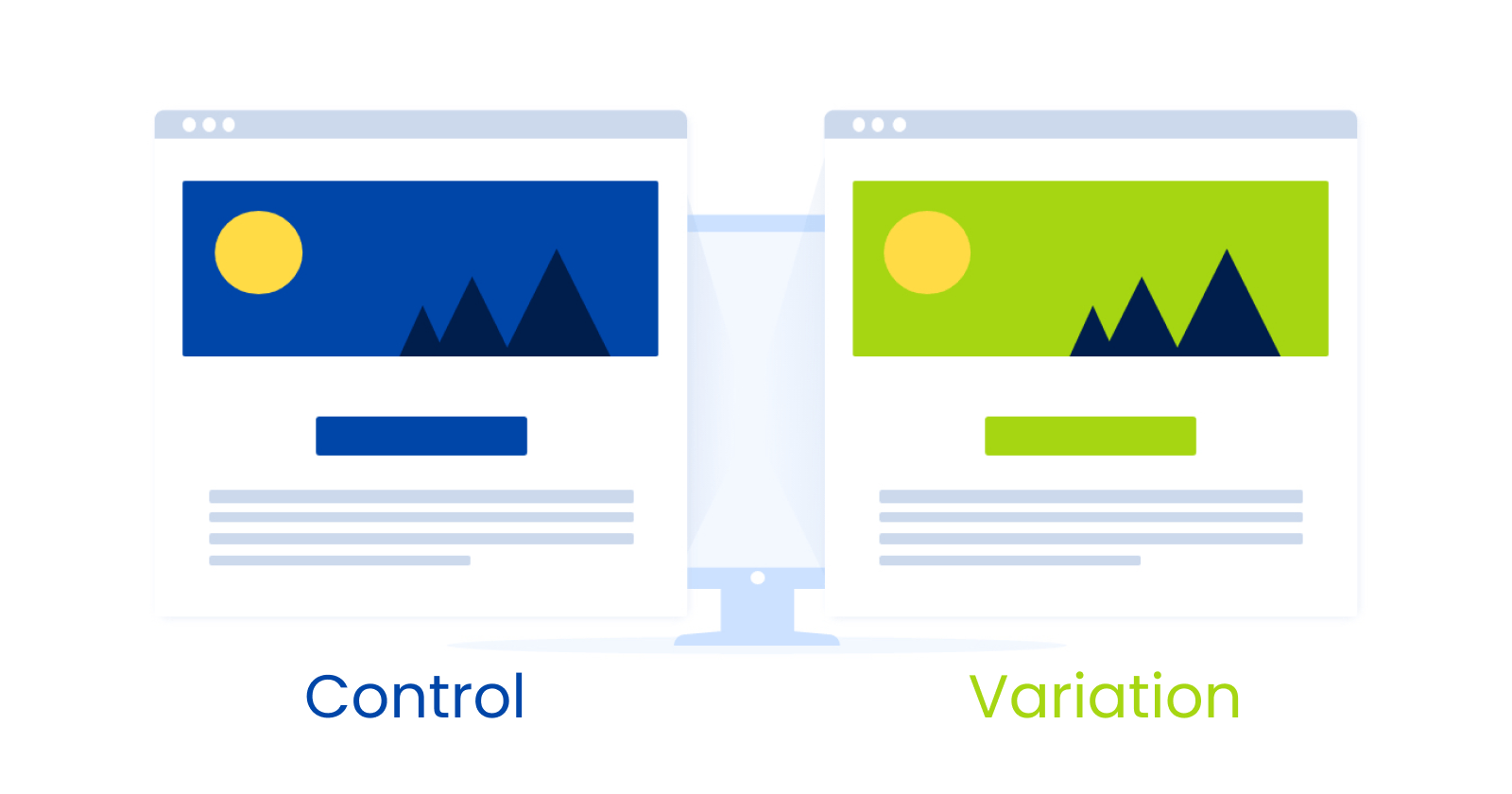

A/B testing (aka split testing) is an experimental technique for determining whether a new design brings an improvement according to a chosen metric.

In web analytics, the idea is to challenge an existing version of a website (A) with a new one (B), by randomly splitting traffic and comparing metrics on each of the splits using statistical analysis to determine which variation performs better for a given conversion goal.

How A/B Testing Works

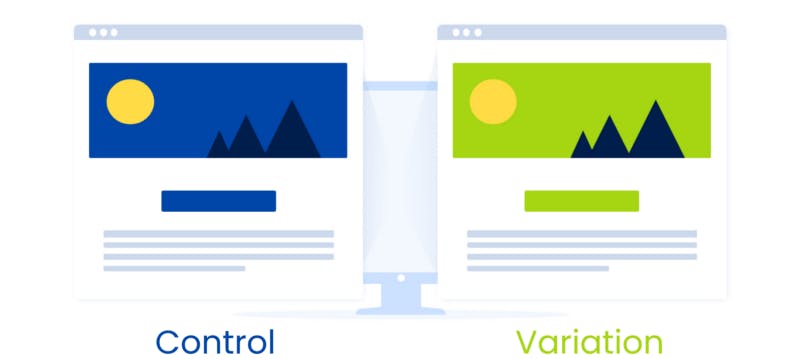

In an A/B test, you take a webpage or app screen and modify it to create a second version of the same page. This change can be as simple as a single headline or button, or it can be a complete redesign of the page.

Then, you segment your traffic into A (the control) and B (the variation) so that half of your traffic is shown the original version of the page (A) and half is shown the modified version (B).

✋ Note:

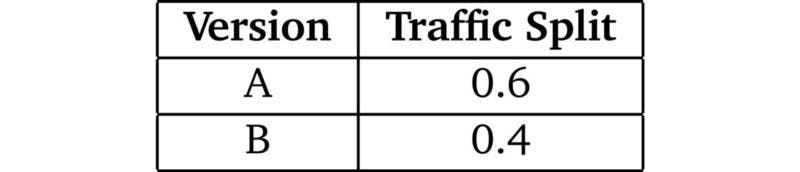

You can choose the split of traffic not to be 50–50 and allocate more traffic to version A if you are concerned about losses due to version B.

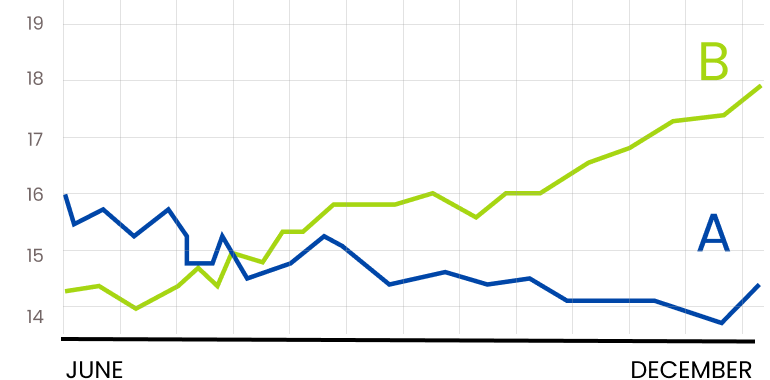
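The traffic split described above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular A/B testing tool works: the function name `assign_variant`, the `experiment` label, and the `share_b` parameter are all hypothetical. It also uses deterministic hashing of the visitor id instead of a random number generator, which is an assumption on my side, so that a returning visitor keeps seeing the same version.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, share_b: float = 0.5) -> str:
    """Return "A" (control) or "B" (variation) for a visitor.

    share_b is the fraction of traffic sent to version B (hypothetical
    parameter): 0.5 gives a 50-50 split, 0.2 keeps 80% of visitors on A.
    """
    if not 0.0 <= share_b <= 1.0:
        raise ValueError("share_b must be between 0 and 1")
    # Hash the experiment name together with the visitor id so the same
    # visitor always lands in the same segment for this experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 8 hex digits into a number in the range [0, 1).
    bucket = int(digest[:8], 16) / 16**8
    return "B" if bucket < share_b else "A"


# Example: a cautious 80-20 split for a made-up experiment name.
for visitor in ["user-1", "user-2", "user-3"]:
    print(visitor, assign_variant(visitor, "landing-page-test", share_b=0.2))
```

Lowering `share_b` is the code equivalent of allocating more traffic to version A when you are worried about losses from version B.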

Comparing results

As visitors are served either the control (A) or the variation (B), their engagement with each experience is measured and collected in an analytics dashboard and analyzed through a statistical engine.

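You can then determine whether changing the experience had a 🔻 negative effect, a 🔺 positive effect, or no detectable effect on visitor behavior. In statistical terms, the null hypothesis is that the change makes no difference, and the alternative hypothesis is that it does.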
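To make the comparison step more concrete, here is a rough sketch of one common way to compare two conversion rates: a two-proportion z-test. It is not necessarily what the statistical engine of a given tool does. The function names, the 0.05 threshold, and the numbers in the usage example are all assumptions made for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare the conversion rates of A and B.

    conv_* is the number of converted sessions, n_* the total sessions.
    Returns (z, p_value), where p_value is two-sided.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the conversion rate if A and B behaved identically.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when no session or every session converted")
    z = (p_b - p_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def interpret(z: float, p_value: float, alpha: float = 0.05) -> str:
    """Map the test result to one of the three outcomes."""
    if p_value >= alpha:
        return "no detectable effect"
    return "positive effect" if z > 0 else "negative effect"


# Made-up numbers: 100 of 1000 sessions converted on A, 150 of 1000 on B.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(interpret(z, p))
```

The threshold `alpha` controls how much evidence you require before declaring a winner. A smaller value means fewer false alarms, but you need more traffic to detect a real difference.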

The use case

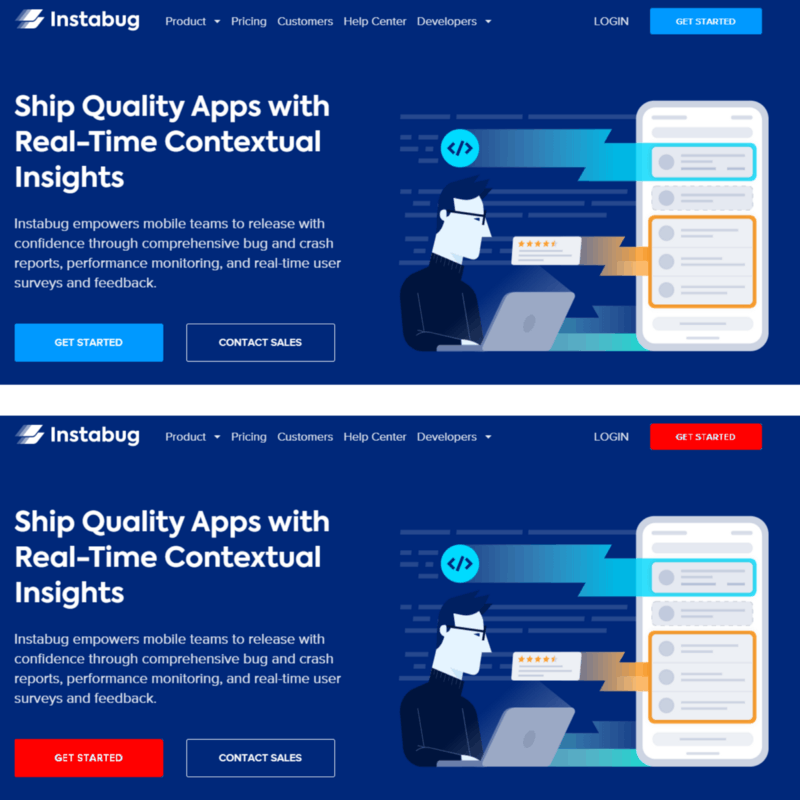

Let us assume that @Instabug is considering changing the color of the CTAs on its landing page from 🔵 blue to 🔴 red, and that the metrics that matter to Instabug are:

1. Average time spent on the landing page per session

2. Conversion rate, defined as the proportion of sessions ending up with a transaction

A/B testing is useful here for checking whether the new CTA color improves the metrics above, by following the steps described in the "How A/B Testing Works" section.
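As a small sketch of how the two metrics could be computed per version, assume each session is logged with the version it was shown, the time spent on the landing page, and whether it ended with a transaction. The `Session` record, its field names, and the sample data are hypothetical and are not taken from any real Instabug data.

```python
from dataclasses import dataclass


@dataclass
class Session:
    variant: str            # "A" (blue CTA) or "B" (red CTA)
    seconds_on_page: float  # time spent on the landing page
    converted: bool         # True if the session ended with a transaction


def summarize(sessions):
    """Return both metrics for every variant found in the sessions."""
    summary = {}
    for variant in sorted({s.variant for s in sessions}):
        group = [s for s in sessions if s.variant == variant]
        summary[variant] = {
            "sessions": len(group),
            # Metric 1: average time spent on the landing page per session.
            "avg_seconds_on_page": sum(s.seconds_on_page for s in group) / len(group),
            # Metric 2: proportion of sessions ending with a transaction.
            "conversion_rate": sum(s.converted for s in group) / len(group),
        }
    return summary


# Made-up sessions, only to show the shape of the result.
sample = [
    Session("A", 30.0, False),
    Session("A", 50.0, True),
    Session("B", 40.0, True),
    Session("B", 60.0, True),
]
for variant, metrics in summarize(sample).items():
    print(variant, metrics)
```

With real traffic you would collect far more sessions per version before comparing the numbers, since a handful of sessions like the ones above says nothing reliable.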

Conclusion

The A/B test measures performance live, with real clients. Provided it is well executed, with no bias when sampling populations A and B, it gives you actual results and data that provide the best estimate of what would happen if you were to deploy version B.

In the next part, we will discuss the idea of A/B testing in code and how to use Feature Toggles (aka Feature Flags) for your upcoming features.

References

3. Advanced-Data Analysis Nanodegree Program (A/B tests)

4. Episode[20]: A/B Testing With Kareem Elansary from Booking.com (Arabic)